From EETimes: At Supercomputing 2013, Micron unveiled what it claims is a fundamentally different processor architecture, one that speeds up the search and analysis of complex and unstructured data streams. The sneak peek of its Automata Processor (AP) architecture was accompanied by the establishment of a Center for Automata Computing at the University of Virginia.

In a telephone interview from the conference floor, Micron’s director of Automata Processor technology development, Paul Dlugosch, told EE Times that Automata differs from conventional CPUs in that its computing fabric is made up of tens of thousands to millions of interconnected processing elements. Its design is based on an adaptation of memory array architecture, exploiting the inherent bit-parallelism of traditional SDRAM.

“Many of the most complex, computational problems that face our industry today require a substantial amount of parallelism in order to increase the performance of the computing system,” said Dlugosch.
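To give a rough feel for what evaluating many automaton states at once means, here is a minimal software sketch. It is not Micron's design or SDK, which the article does not detail. It uses the classic Shift-And (bit-parallel) string-matching technique as an analogy: each bit of an integer stands for one automaton state, and every state is updated in a single step per input symbol. The function names `build_symbol_masks` and `find_matches` are hypothetical, chosen for illustration.

```python
def build_symbol_masks(pattern):
    """Map each symbol to a bitmask of the pattern positions where it occurs."""
    masks = {}
    for i, ch in enumerate(pattern):
        masks[ch] = masks.get(ch, 0) | (1 << i)
    return masks


def find_matches(pattern, stream):
    """Return the end indexes in `stream` where `pattern` occurs (Shift-And).

    Bit i of `active` is set when the first i+1 pattern symbols match the
    input ending at the current position. All bits (states) advance together
    on each symbol, which is the bit-parallel part of the technique.
    """
    if not pattern:
        raise ValueError("pattern must be non-empty")
    masks = build_symbol_masks(pattern)
    accept = 1 << (len(pattern) - 1)  # bit marking a complete match
    active = 0
    ends = []
    for pos, ch in enumerate(stream):
        # Advance every active state by one, allow a fresh start at state 0,
        # then keep only the states whose expected symbol equals `ch`.
        active = ((active << 1) | 1) & masks.get(ch, 0)
        if active & accept:
            ends.append(pos)
    return ends


if __name__ == "__main__":
    print(find_matches("aba", "ababa"))
```

In this sketch the parallelism is limited to the bits of one integer on a conventional CPU; the article's claim is that the AP applies a comparable idea across far more processing elements in hardware.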

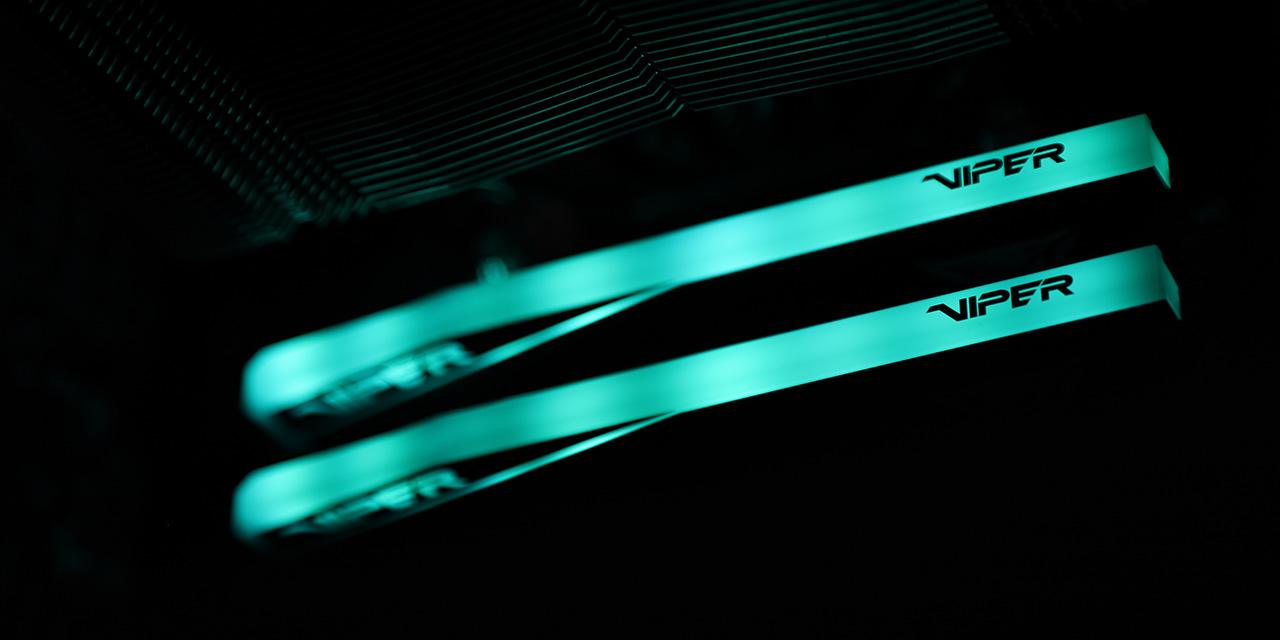

Conventional SDRAM is organized into a two-dimensional array of rows and columns, and each read or write operation accesses a memory cell. In the AP, said Dlugosch, the memory is not used to store data; it is used to stream back analysis of data. The AP architecture uses a DDR3-like memory interface and will be made available as single components or as DIMM modules.

Micron will also make available graphical design and simulation tools and a software development kit (SDK) to help developers design, compile, test, and deploy their own applications. A PCIe board populated with AP DIMMs will be available to early-access application developers so they can begin plug-in development of AP applications. Samples of the AP and the SDK will be available in 2014.

Automata has been in development for seven years, spurred by customer requests for even faster speeds. Dlugosch said Micron decided it was time to take a different approach to a problem consistently voiced by CPU vendors and OEMs: memory is the bottleneck. This problem has been exacerbated since the early days of big data in 2007, he said.
