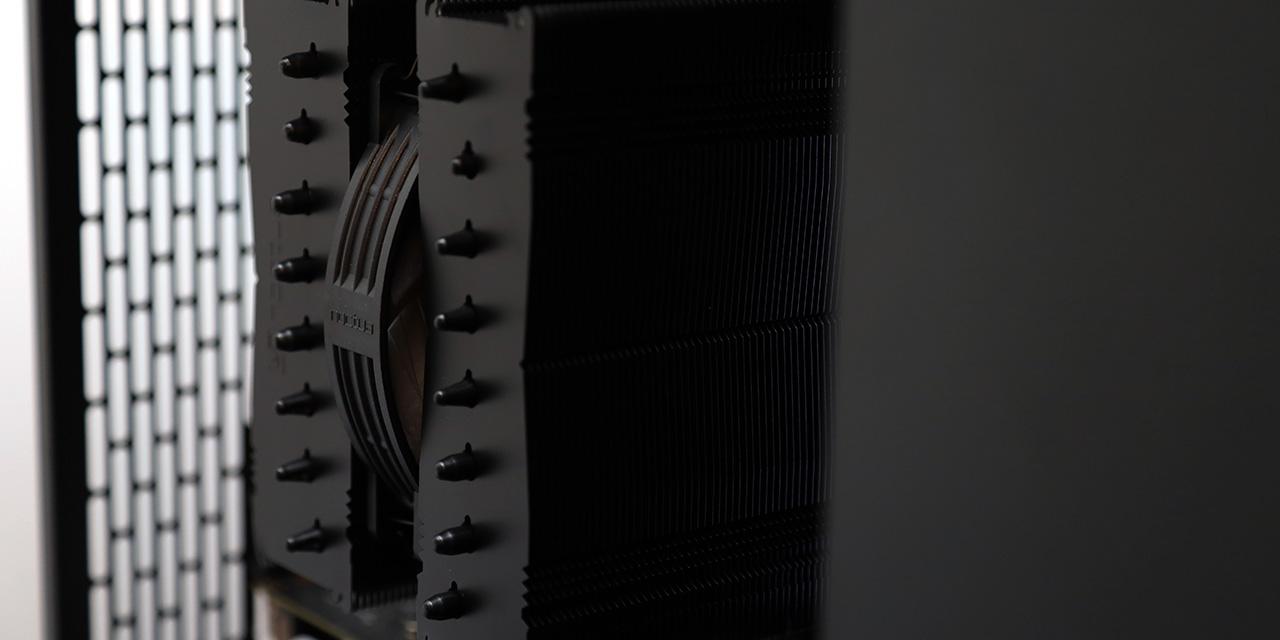

From ExtremeTech: Today Micron is announcing its newest version of high-bandwidth memory (HBM) for AI accelerators and high-performance computing (HPC). The company had previously offered HBM2 modules, but its newest memory technology is so advanced it's skipping right over first-generation HBM3 and calling it HBM3 Gen2. It claims to offer over 1.2TB per second of bandwidth, which would place it above its rivals, SK Hynix and Samsung, in the arms race over stacked memory bandwidth used in data center GPUs and SoCs.

In its announcement, the company claims its HBM3 Gen2 memory is the world's fastest and most efficient. It states that its 24GB, eight-layer stack offers 1.2TB/s of bandwidth via a 9.2Gb/s pin speed, which is up to 50% faster than its rivals' current HBM3 offerings. It also claims a 2.5X improvement in performance-per-watt over previous-generation HBM offerings. Overall, Micron says its newest technology is the industry leader in bandwidth, efficiency, and density.

Micron says the secret sauce in its newest HBM stacks is its 1β (1-beta) DRAM process node, which it says allows it to fit eight layers of 24Gb DRAM dies into the existing HBM3 dimensions of 11mm x 11mm, according to Tom's Hardware. This allows an 8-layer stack to go from 16GB of capacity and 819GB/s of bandwidth on a 1024-bit memory bus to 24GB and 1.2TB/s of bandwidth on Micron's HBM3 Gen2. Micron says it also has a 12-layer stack waiting in the wings that offers 36GB of capacity, and it'll be arriving in early 2024.
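
The headline numbers can be roughly checked with simple arithmetic: peak bandwidth is the per-pin data rate multiplied by the bus width, and capacity is the per-die density multiplied by the number of layers, both divided by 8 to convert bits to bytes. The Python sketch below is only an illustration; the function names are made up for this example, the 9.2Gb/s figure is the per-pin rate in gigabits per second, and the 6.4Gb/s pin speed and 16Gb die density for standard HBM3 are not stated in the article but inferred from its 819GB/s and 16GB figures.

```python
BITS_PER_BYTE = 8


def stack_bandwidth_gbytes(pin_speed_gbit: float, bus_width_bits: int = 1024) -> float:
    """Peak bandwidth of one stack in GB/s: per-pin rate (Gb/s) times bus width."""
    return pin_speed_gbit * bus_width_bits / BITS_PER_BYTE


def stack_capacity_gbytes(die_density_gbit: int, layers: int) -> float:
    """Capacity of one stack in GB: per-die density (Gb) times number of layers."""
    return die_density_gbit * layers / BITS_PER_BYTE


# Standard HBM3: 6.4 Gb/s per pin is an inferred value (819 GB/s over 1024 bits).
hbm3_bw = stack_bandwidth_gbytes(6.4)
# Micron HBM3 Gen2: 9.2 Gb/s per pin on the same 1024-bit bus.
gen2_bw = stack_bandwidth_gbytes(9.2)

print(f"HBM3 bandwidth:      {hbm3_bw:.1f} GB/s")
print(f"HBM3 Gen2 bandwidth: {gen2_bw:.1f} GB/s")
print(f"Speedup:             {gen2_bw / hbm3_bw:.2f}x")

# 16 Gb dies are inferred from the 16 GB, 8-layer figure; 24 Gb dies are from the article.
print(f"HBM3, 8 layers:       {stack_capacity_gbytes(16, 8):.0f} GB")
print(f"HBM3 Gen2, 8 layers:  {stack_capacity_gbytes(24, 8):.0f} GB")
print(f"HBM3 Gen2, 12 layers: {stack_capacity_gbytes(24, 12):.0f} GB")
```

Under these assumptions the Gen2 stack works out to about 1.18TB/s, which rounds to the quoted 1.2TB/s, and the gain over 819GB/s is roughly 44%, consistent with an "up to 50%" claim if the actual pin speed is somewhat above 9.2Gb/s.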
