Page 9 - Power Usage, Overclocking

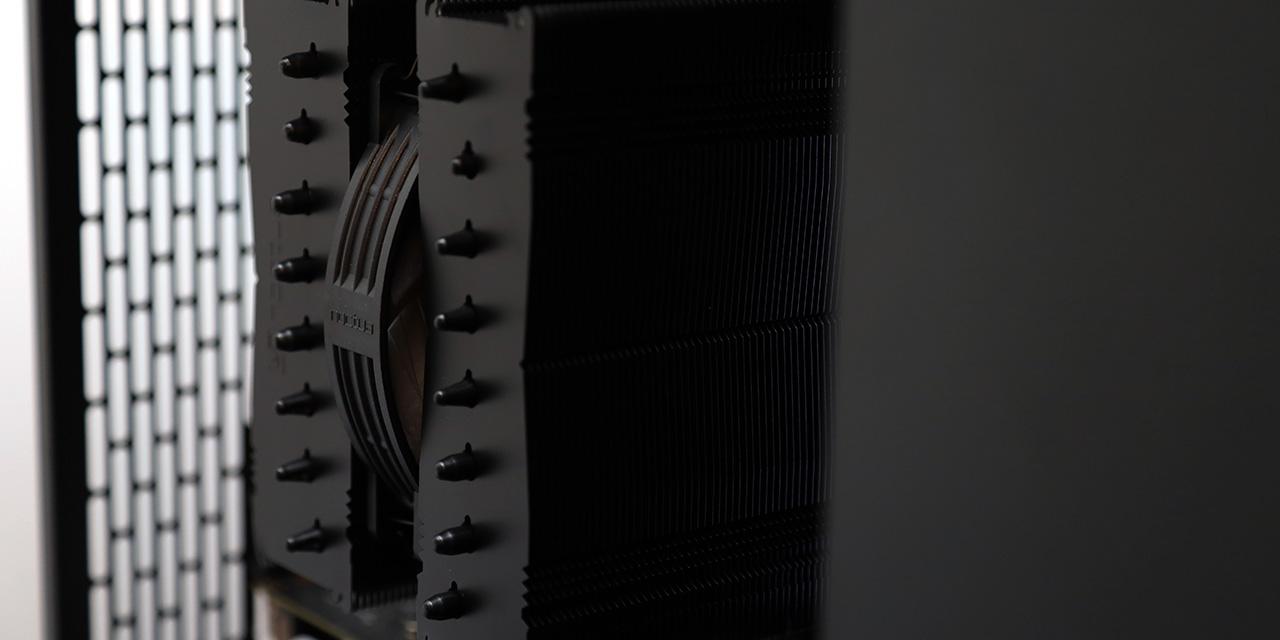

The G92 65nm core in the Gigabyte 9800GT is quite comparable to the other 8800GTs benchmarked, as we can see from our charts above. Please note that the load figures cannot be measured against the no-graphics-card baseline: the entire system is under load, CPU included, so the difference is not limited to graphics card power consumption alone. The figures are accurate relative to each other, however; the 129W 'None' value is only a placeholder. With that in mind, power consumption with the Gigabyte 9800GT rose from 165W to a maximum of 236W -- which is very good. Gigabyte likes to promote their lower RDS(on) MOSFET design, quality solid capacitors, and low power loss ferrite core design. This is reflected in the chart: power consumption is around 10W lower than the Asus 8800GT TOP, which is based on the reference NVIDIA design, and a couple of watts lower than the 8800GT TurboForce. The latter gap is either within the margin of error or down to the card's non-overclocked status (although overclocking without a voltage increase should not make a big difference).
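For readers who want to redo the arithmetic on the two readings quoted above, here is a minimal Python sketch. It assumes the 165W figure is the lower (idle) reading and 236W is the peak under load; the function names are ours and purely illustrative.

```python
# Readings quoted in the text, in watts.
LOW_W = 165    # lower reading (assumed here to be the idle figure)
PEAK_W = 236   # maximum reading under load


def load_delta(low_w, peak_w):
    """Extra power drawn at peak compared with the lower reading."""
    return peak_w - low_w


def percent_increase(low_w, peak_w):
    """Rise from low to peak, as a percentage of the low reading."""
    return round((peak_w - low_w) / low_w * 100, 1)


print(load_delta(LOW_W, PEAK_W))        # 71
print(percent_increase(LOW_W, PEAK_W))  # 43.0
```

Keep in mind that the 71W swing covers everything that ramps up under load, CPU included, so it is not a graphics-card-only number.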

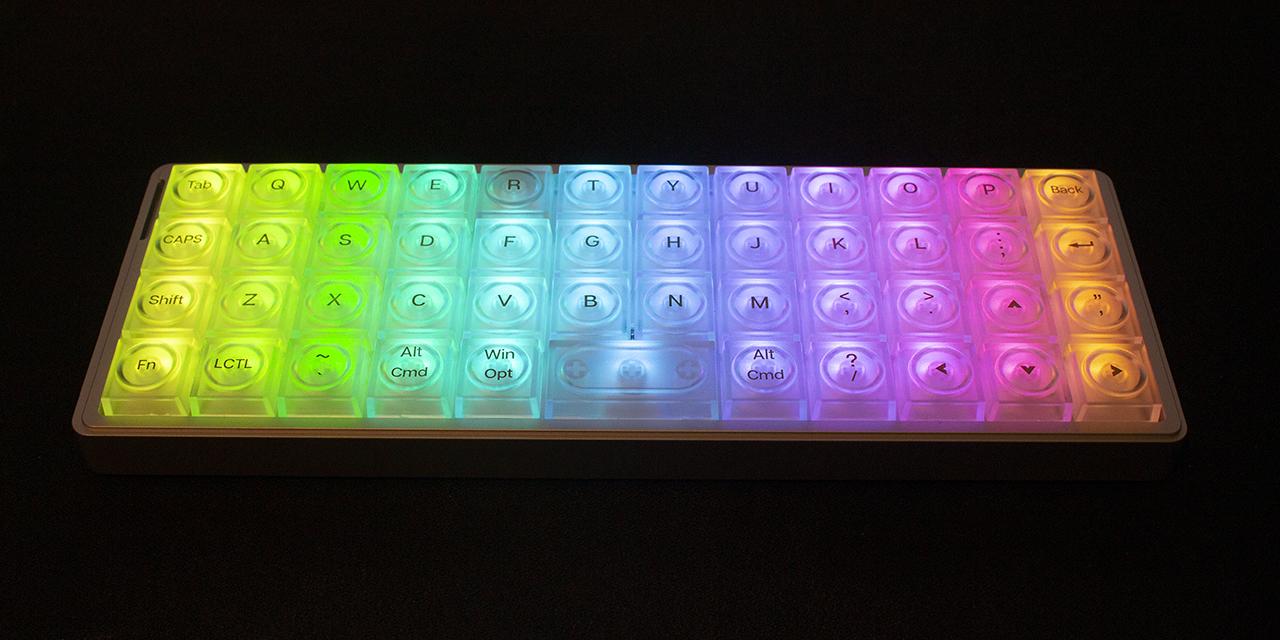

Using Gigabyte's Gamer HUD overclocking software, we raised the voltage to 1.2V on the 9800GT 512MB to see what kind of results we could get. This piece of software from Gigabyte allows you to raise the voltage from 1.15V up to 1.4V, with GPU, Shader, and Memory overclocking options as standard. I kept it at 1.2V since going higher didn't make much difference, not to mention that voltages at that point could be dangerous; additionally, there's no guarantee that what's indicated on screen is actually what's delivered to the card. On the side are two graphs to monitor GPU usage and temperature.

The maximum stable overclock we were able to attain for the core is 748MHz at 1.2V. We've seen better from cards such as the 8800GT TurboForce, where what is essentially the same card went up to 820MHz, but either the TurboForce factory overclocked units are the cream of the crop or this one is just not very good at overclocking. That's still a pretty good 24.7% overclock from stock.

The stock shader clock is designed to run at 1.5GHz. Using the 8800GT TurboForce as a reference, it didn't take me too long to find the maximum shader clock -- besides the time required to restart the computer every time it crashed, of course. The 8800GT TurboForce's maximum went to 2.12GHz, and the 9800GT 512MB followed close behind at 1.99GHz -- an impressive 32.7% overclock, going by those two clocks.
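The core and shader percentages can be checked with a few lines of Python. This is just a sketch of the arithmetic: the 600MHz stock core clock is our assumption (the reference clock for these cards, not stated on this page), the shader figures are the 1.5GHz, 1.99GHz, and 2.12GHz values quoted above, and `overclock_percent` is a helper name made up for this example.

```python
def overclock_percent(stock_mhz, achieved_mhz):
    """Gain over stock, as a percentage rounded to one decimal place."""
    return round((achieved_mhz - stock_mhz) / stock_mhz * 100, 1)


CORE_STOCK_MHZ = 600     # assumption: reference core clock, not given in the text
SHADER_STOCK_MHZ = 1500  # 1.5GHz stock shader clock from the text

print(overclock_percent(CORE_STOCK_MHZ, 748))     # 24.7  (core, this card)
print(overclock_percent(SHADER_STOCK_MHZ, 1990))  # 32.7  (shader, this card)
print(overclock_percent(SHADER_STOCK_MHZ, 2120))  # 41.3  (shader, 8800GT TurboForce)
```

The shader results use the rounded GHz values from the text, so the real percentages may differ slightly if the actual clocks were not exactly 1990MHz and 2120MHz.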

NVIDIA defines the minimum memory speed at 900MHz for reference NVIDIA designs. The Gigabyte GeForce 9800GT's Samsung memory chips are actually rated to run at 1GHz, but out of the box they only run at 900MHz (NVIDIA spec). The maximum overclock we were able to attain was 1140MHz actual (2280MHz effective), which is 140MHz over Samsung's spec (+14%), or 27% faster than stock 8800GT cards. It uses the same chips as the Gigabyte 8800GT TurboForce -- but as they say, different chips overclock differently.
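The memory numbers work the same way, with one extra step for the effective rate. The sketch below assumes the effective figure is simply twice the actual clock, which matches the 1140MHz/2280MHz pair quoted above; the helper names are ours.

```python
NVIDIA_SPEC_MHZ = 900     # out-of-the-box memory clock (NVIDIA spec)
SAMSUNG_RATED_MHZ = 1000  # what the Samsung chips are rated for
ACHIEVED_MHZ = 1140       # maximum overclock reached in our testing


def effective_rate(actual_mhz):
    """Effective rate, assuming two transfers per clock (double data rate)."""
    return actual_mhz * 2


def percent_over(base_mhz, achieved_mhz):
    """Gain over a baseline clock, as a percentage rounded to one decimal."""
    return round((achieved_mhz - base_mhz) / base_mhz * 100, 1)


print(effective_rate(ACHIEVED_MHZ))                   # 2280
print(percent_over(NVIDIA_SPEC_MHZ, ACHIEVED_MHZ))    # 26.7
print(percent_over(SAMSUNG_RATED_MHZ, ACHIEVED_MHZ))  # 14.0
```

Measured against the 900MHz stock setting the gain is about 27%; measured against what the chips are rated for, it is 14%.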

Generally speaking, our specific unit's core and shader didn't overclock too impressively, but the memory overclock we were able to achieve is pretty dang good.
